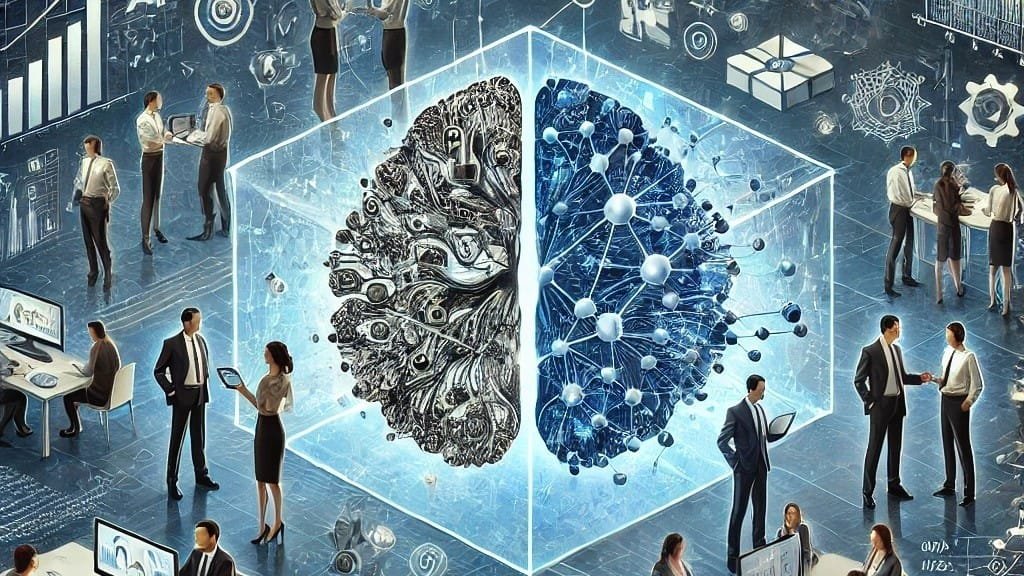

As machine learning becomes deeply embedded in healthcare, finance, cybersecurity, and everyday applications, one major challenge has emerged: we need to understand how AI makes decisions.

This is where Explainable AI (XAI) comes in: a set of methods and practices for making machine learning models more transparent, interpretable, and trustworthy.

XAI helps answer questions like:

- Why did the model reject this loan?

- Why was this tumor classified as malignant?

- Why did the algorithm flag this user as suspicious?

In a world driven by automation and data, explainability isn’t optional — it’s essential.

Why Explainable AI Matters

- Builds trust between humans and algorithms

- Helps developers debug and improve models

- Helps detect bias so that unfair behavior can be corrected

- Often required for legal or regulatory compliance in regulated industries

- Enables responsible and transparent AI adoption

If we can’t understand AI decisions, we can’t fully trust them.

Key Components of Explainable AI

1. Transparency

The model’s structure, data flow, and decision logic must be clear.

Example: Rule-based systems or decision trees show exactly how decisions are formed.
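A minimal sketch of what that looks like in code, assuming a hypothetical loan rule set (the field names and thresholds are illustrative assumptions, not real lending policy). Every rejection comes back with the exact rules that triggered it:

```python
def decide_loan(applicant):
    """Return (decision, reasons) for a hypothetical loan applicant.

    The thresholds are illustrative assumptions, not real lending policy.
    """
    reasons = []
    if applicant["credit_score"] < 600:
        reasons.append("credit_score below 600")
    if applicant["debt_to_income"] > 0.40:
        reasons.append("debt_to_income above 0.40")
    if applicant["years_employed"] < 1:
        reasons.append("less than 1 year employed")
    # Reject if any rule fired; the fired rules are the explanation.
    decision = "reject" if reasons else "approve"
    return decision, reasons


applicant = {"credit_score": 580, "debt_to_income": 0.45, "years_employed": 3}
decision, reasons = decide_loan(applicant)
print(decision, reasons)
```

Because the decision logic and the explanation are the same code, there is no gap between what the system did and what it reports.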

2. Interpretability

Humans should be able to understand the “why” behind predictions — even in complex neural networks.

Tools like SHAP, LIME, and feature importance charts help by attributing a prediction to the input features that drove it.

3. Accountability

XAI ensures each decision can be traced back, reviewed, and justified.

This is critical in sectors such as law, hiring, healthcare, and credit scoring.

How XAI Improves Machine Learning Models

1. Detects & Removes Bias

XAI can reveal when a model relies on biased patterns learned from its training data, so developers can find and address them.

2. Boosts Model Performance

Understanding which features matter helps refine and optimize algorithms.

3. Enhances User Trust

Clear explanations make users confident in ML-driven systems.

4. Supports Ethical AI

Provides the transparency needed to assess fairness and compliance in sensitive domains.

Popular Explainable AI Techniques

LIME (Local Interpretable Model-Agnostic Explanations)

Explains an individual prediction by fitting a simple, interpretable model to the black-box model's behavior around that one input.
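The core idea can be sketched in plain Python. This is a simplified illustration, not the `lime` package's API: `black_box`, `local_surrogate`, and all parameter values are assumptions made for the example. It perturbs the input, weights each sample by proximity, and fits a weighted linear model whose coefficients serve as the local explanation.

```python
import math
import random


def black_box(x):
    """Stand-in for an opaque model (hypothetical): nonlinear in x[0], linear in x[1]."""
    return x[0] ** 2 + 3.0 * x[1]


def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for r in reversed(range(n)):
        w[r] = (M[r][n] - sum(M[r][c] * w[c] for c in range(r + 1, n))) / M[r][r]
    return w


def local_surrogate(predict, instance, n_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns [intercept, coef_0, coef_1, ...] for features centred on the instance.
    """
    rng = random.Random(seed)
    d = len(instance) + 1
    A = [[0.0] * d for _ in range(d)]  # weighted normal equations: A w = b
    b = [0.0] * d
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in instance]
        z = [xi + o for xi, o in zip(instance, offsets)]
        dist2 = sum(o * o for o in offsets)
        weight = math.exp(-dist2 / kernel_width ** 2)  # closer samples count more
        row = [1.0] + offsets  # centred features: intercept approximates the local prediction
        target = predict(z)
        for i in range(d):
            b[i] += weight * row[i] * target
            for j in range(d):
                A[i][j] += weight * row[i] * row[j]
    return solve(A, b)


weights = local_surrogate(black_box, [2.0, 1.0])
print(weights)
```

Around the point `[2.0, 1.0]` the fitted coefficients should land close to the local slopes of the black box (about 4 for the first feature and 3 for the second), which is the kind of "this feature pushed the prediction this much" statement LIME reports.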

SHAP (SHapley Additive exPlanations)

Uses Shapley values from cooperative game theory to assign each feature its share of the contribution to a prediction.
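To make the idea concrete, here is a brute-force sketch that computes exact Shapley values for a tiny, hypothetical three-feature model by averaging each feature's marginal contribution over every ordering. The `model`, feature names, and baseline are assumptions for illustration; the `shap` library itself relies on much faster approximations and model-specific algorithms rather than this enumeration.

```python
from itertools import permutations


def model(x):
    """Hypothetical risk score with an interaction between income and debt."""
    return 2.0 * x["income"] + 1.0 * x["age"] + 3.0 * x["income"] * x["debt"]


def shapley_values(predict, instance, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings."""
    features = list(instance)
    contrib = {f: 0.0 for f in features}
    orderings = list(permutations(features))
    for order in orderings:
        current = dict(baseline)  # start from the reference input
        prev = predict(current)
        for f in order:
            current[f] = instance[f]  # switch one feature to its actual value
            new = predict(current)
            contrib[f] += new - prev
            prev = new
    return {f: total / len(orderings) for f, total in contrib.items()}


instance = {"income": 1.0, "age": 2.0, "debt": 1.0}
baseline = {"income": 0.0, "age": 0.0, "debt": 0.0}
phi = shapley_values(model, instance, baseline)
print(phi)
```

The contributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split evenly between `income` and `debt`. Enumeration grows factorially with the number of features, so this approach is only practical for toy cases.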

Feature Importance Visualization

Ranks which variables influence predictions the most.
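One common way to produce such a ranking is permutation importance: shuffle one feature column and measure how much the model's error grows. The sketch below assumes a hypothetical fitted `model` and synthetic data, and uses only the standard library.

```python
import random


def model(row):
    """Hypothetical fitted model: leans on feature 0, slightly on 1, ignores 2."""
    return 5.0 * row[0] + 0.5 * row[1] + 0.0 * row[2]


def mean_abs_error(predict, X, y):
    return sum(abs(predict(r) - t) for r, t in zip(X, y)) / len(X)


def permutation_importance(predict, X, y, n_features, rng):
    """Importance of feature j = increase in error after shuffling column j."""
    base = mean_abs_error(predict, X, y)
    scores = []
    for j in range(n_features):
        column = [row[j] for row in X]
        rng.shuffle(column)  # break the link between feature j and the target
        X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
        scores.append(mean_abs_error(predict, X_perm, y) - base)
    return scores


rng = random.Random(0)
X = [[rng.random() for _ in range(3)] for _ in range(200)]
y = [model(row) for row in X]  # synthetic labels, so the baseline error is zero
scores = permutation_importance(model, X, y, 3, rng)
ranking = sorted(range(3), key=lambda j: scores[j], reverse=True)
print(ranking, scores)
```

A feature the model ignores gets an importance of zero, because shuffling it cannot change any prediction.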

Counterfactual Explanations

Answers: “What needs to change for the decision to be different?”
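A minimal sketch of that question in code, assuming a hypothetical approval rule and a deliberately simple search that changes one feature at a time in fixed steps (real counterfactual methods search over several features and add plausibility constraints):

```python
def approve(applicant):
    """Hypothetical rule: approve when income minus twice the debt is at least 20 (in thousands)."""
    return applicant["income"] - 2 * applicant["debt"] >= 20


def single_feature_counterfactuals(predict, applicant, steps, max_steps=100):
    """For each feature, find the nearest value (in whole steps) that flips the decision.

    `steps` maps a feature name to a signed step size, which encodes the
    direction of change considered actionable for that feature.
    """
    original = predict(applicant)
    found = {}
    for feature, step in steps.items():
        candidate = dict(applicant)
        for _ in range(max_steps):
            candidate[feature] += step
            if predict(candidate) != original:
                found[feature] = candidate[feature]
                break
    return found


applicant = {"income": 40, "debt": 15}
changes = single_feature_counterfactuals(approve, applicant, {"income": 1, "debt": -1})
print(changes)  # {'income': 50, 'debt': 10}
```

Read as an explanation, the result says the rejected applicant would have been approved with an income of 50 or with debt reduced to 10, holding everything else fixed.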

Partial Dependence Plots (PDPs)

Show how the model's average prediction changes as one feature is varied across its range.
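The computation behind a PDP is short enough to sketch directly: force one feature to each value on a grid, leave the other features as observed, and average the predictions. The `model` and the four-row dataset below are hypothetical, chosen so the curve is easy to check by hand.

```python
def model(row):
    """Hypothetical model: nonlinear effect of age, linear effect of income."""
    age, income = row
    return (age - 40) ** 2 / 100 + 0.5 * income


def partial_dependence(predict, X, feature_index, grid):
    """Average prediction over the dataset with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in X:
            modified = list(row)
            modified[feature_index] = value  # override only the feature of interest
            total += predict(modified)
        curve.append(total / len(X))
    return curve


X = [[25, 30], [35, 50], [45, 40], [60, 80]]  # columns: age, income
grid = [20, 30, 40, 50, 60]
curve = partial_dependence(model, X, 0, grid)
print(curve)  # [29.0, 26.0, 25.0, 26.0, 29.0]
```

Plotting `curve` against `grid` would give the U-shaped dependence on age. Because the method averages over the other features, it can hide interactions between features.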

Industries That Rely on Explainable AI

| Industry | Why XAI Is Essential |
|---|---|
| Healthcare | Doctors must trust diagnoses and treatment decisions |
| Finance | Loan approvals and risk scores require justification |
| Cybersecurity | Analysts need to understand flagged anomalies |
| Human Resources | Prevents bias in automated hiring |
| Law & Government | Ensures transparency in automated decision systems |

Best Practices for Implementing Explainable AI

- Start with **interpretable models** where possible

- Use post-hoc XAI tools such as SHAP or LIME when deep learning or other complex architectures are needed

- Validate explanations with domain experts

- Maintain human oversight in high-risk decisions

- Keep a focus on fairness, bias detection, and privacy
